AI is everywhere: recommendations on social media, spam filters in email, navigation apps, photo tools, customer support chatbots, and even decisions that affect jobs, loans, or healthcare. Most of the time, AI feels like “smart software.” But behind the scenes, AI can also be unfair, misleading, or invasive—even when nobody intended harm.

- AI ethics in plain English

- What “AI bias” actually means (and how it happens)

- Where you’ll run into AI bias in everyday life

- 1) Feeds, recommendations, and search

- 2) Job filtering and hiring tools

- 3) Lending, insurance, and “risk scores”

- 4) Face recognition and camera-based AI

- 5) Generative AI (chatbots and image tools)

- A practical ethics checklist for users (10 questions)

- How to spot bias in AI outputs

- Privacy & AI: how to protect your data

- Transparency & explanations: what you should expect

- What responsible AI looks like (signals to look for)

- Rules & standards you’ll hear about

- What to do if an AI system harms you

- Key Takeaways

- FAQs

- 1) Is AI bias the same as human bias?

- 2) Can an AI be “perfectly fair”?

- 3) Why do AI tools sound confident even when wrong?

- 4) How can I quickly verify an AI answer?

- 5) Does using AI mean my data will be used for training?

- 6) Are recommendations (like YouTube/TikTok/Instagram) “biased” by design?

- 7) What’s the difference between “fairness” and “accuracy”?

- 8) What should I look for before trusting AI in a serious decision?

- 9) If AI is biased, should we stop using it?

- 10) Where can I learn more without getting overwhelmed?

- References & further reading

This guide explains AI ethics and bias in plain language, with a user-first focus: how bias happens, where you’ll encounter it, and what you can do to protect yourself—without needing a computer science degree.

AI ethics in plain English

AI ethics is basically the question: “Is this AI being used in a way that is fair, safe, respectful, and accountable to people?”

Ethical AI is not only about “good intentions.” It’s about outcomes. A well-meaning system can still harm people if it’s trained on the wrong data, tested poorly, or deployed without safeguards.

Common ethical principles (the user-friendly version)

- Fairness: The system shouldn’t treat people worse because of who they are (gender, skin tone, language, disability, location, religion, age, etc.).

- Privacy: Your personal data should be protected and not reused in ways you didn’t agree to.

- Transparency: You should know when AI is involved and what it is doing.

- Explainability: In high-stakes decisions, you should be able to get a meaningful explanation and appeal.

- Safety & reliability: The AI should work consistently, avoid dangerous failures, and be monitored for problems.

- Accountability: A real person or organization must be responsible when things go wrong.

Many global frameworks repeat these themes in different words. For example, the OECD AI Principles and UNESCO’s AI ethics recommendation both emphasize human rights, fairness, and transparency.

What “AI bias” actually means (and how it happens)

AI bias means an AI system produces unfair or systematically skewed outcomes—often affecting certain groups more than others.

Important: bias is not always “someone being evil.” Bias often comes from the world the AI learns from.

How bias gets into AI (the main pathways)

- Data imbalance: If the training data contains more of one group than another, the AI may perform better for the majority group.

- Historical bias: If past decisions were biased (hiring, policing, lending), the data “teaches” the AI those patterns.

- Labeling bias: Human labels (what is “toxic,” “professional,” “suspicious”) can reflect subjective judgments.

- Proxy variables: Even if an AI doesn’t use protected attributes directly, it may use close “proxies” (zip code, school, device type) that correlate with them.

- Feedback loops: AI recommends content → people click → AI learns “that’s what people want” → it amplifies the same content, creating echo chambers.

- Deployment mismatch: A model built for one region, language, or demographic may fail when used elsewhere.
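
To make the proxy-variable pathway concrete, here is a minimal Python sketch. The records, the field names `zip_code` and `group`, and the helper `proxy_strength` are all hypothetical, invented for illustration. It estimates how well group membership can be guessed from zip code alone; if the score sits well above the baseline, the zip code can stand in for the protected attribute even when that attribute is never given to the model.

```python
from collections import Counter, defaultdict

def proxy_strength(records, proxy_key, group_key):
    """Share of records whose group is guessed correctly when we
    predict the most common group for each proxy value."""
    by_proxy = defaultdict(Counter)
    for r in records:
        by_proxy[r[proxy_key]][r[group_key]] += 1
    correct = sum(c.most_common(1)[0][1] for c in by_proxy.values())
    return correct / len(records)

# Hypothetical toy data: two zip codes, two groups.
records = [
    {"zip_code": "11111", "group": "A"},
    {"zip_code": "11111", "group": "A"},
    {"zip_code": "11111", "group": "A"},
    {"zip_code": "11111", "group": "B"},
    {"zip_code": "22222", "group": "B"},
    {"zip_code": "22222", "group": "B"},
    {"zip_code": "22222", "group": "B"},
    {"zip_code": "22222", "group": "A"},
]

strength = proxy_strength(records, "zip_code", "group")
# Baseline: always guess the overall most common group.
baseline = max(Counter(r["group"] for r in records).values()) / len(records)
print(strength, baseline)  # 0.75 0.5
```

In this toy data, zip code raises the guess rate from 50% to 75%, so removing the `group` column alone would not remove the signal.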

Bias isn’t one thing—there are different kinds

- Representation bias: Who is included (or missing) in the data?

- Measurement bias: Are we measuring the right thing, or a flawed proxy?

- Outcome bias: Do errors fall more heavily on some groups than others?

- Interaction bias: Does the product design cause certain users to be misunderstood or ignored?
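
The outcome-bias question ("do errors fall more heavily on some groups?") can be checked with simple counting once predictions and true outcomes are available. The sketch below is illustrative only: the group names, the data, and the function `false_positive_rate_by_group` are assumptions, not part of any specific tool. It computes, for each group, the share of people who should not have been flagged but were.

```python
from collections import defaultdict

def false_positive_rate_by_group(rows):
    """rows: (group, flagged, truly_positive) tuples.
    Returns, per group, the share of truly negative cases wrongly flagged."""
    negatives = defaultdict(int)
    false_positives = defaultdict(int)
    for group, flagged, truly_positive in rows:
        if not truly_positive:
            negatives[group] += 1
            if flagged:
                false_positives[group] += 1
    return {g: false_positives[g] / negatives[g] for g in negatives}

# Hypothetical outcomes from a "suspicious activity" flagging system.
rows = (
    [("group_a", False, False)] * 36 + [("group_a", True, False)] * 4 +
    [("group_b", False, False)] * 6 + [("group_b", True, False)] * 4 +
    [("group_a", True, True)] * 10 + [("group_b", True, True)] * 10
)

rates = false_positive_rate_by_group(rows)
print(rates)  # {'group_a': 0.1, 'group_b': 0.4}
```

Here both groups have the same number of wrongly flagged people (4), yet group_b members are four times as likely to be wrongly flagged, which is why rates matter more than raw counts.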

If you want a deeper (but still accessible) look at fairness concepts, Google’s What-If Tool has a helpful “playing with fairness” explainer.

Where you’ll run into AI bias in everyday life

You don’t need to use “enterprise AI” to be impacted. Bias can show up in everyday tools:

1) Feeds, recommendations, and search

Algorithms decide what you see, what goes viral, and what “truth” feels like. Because sensational content tends to drive engagement, systems tuned for engagement can amplify stereotypes, misinformation, or extreme content.

2) Job filtering and hiring tools

Resume screeners and video interview tools can disadvantage candidates based on wording, accent, career breaks, disability, or nontraditional backgrounds—especially if the system learns from historical hiring patterns.

3) Lending, insurance, and “risk scores”

AI-driven credit decisions can be influenced by factors such as income, neighborhood, or education. When those factors act as proxies for protected attributes, the decisions may unfairly impact certain communities.

4) Face recognition and camera-based AI

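Research has documented performance gaps across demographics in some commercial systems. The 2018 Gender Shades study, for example, found that commercial gender classifiers made far more errors on darker-skinned women than on lighter-skinned men. Gaps like these raise concerns about real-world harm when the technology is used in high-stakes settings.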

5) Generative AI (chatbots and image tools)

Generative AI can reflect biased stereotypes, present confident misinformation, or produce uneven quality across languages and cultures. It can also “sound authoritative” even when it’s guessing.

Frameworks like the NIST AI Risk Management Framework exist because these harms aren’t theoretical—they’re recurring patterns.

A practical ethics checklist for users (10 questions)

Before you rely on an AI tool—especially for money, health, legal, or reputation decisions—ask these:

- What is this AI used for? Is it decision-making, or just suggestions?

- What could go wrong? Who gets harmed if it’s wrong?

- Who is accountable? Is there a real company/contact and a complaint path?

- Does it disclose AI use? Are you clearly told when AI is involved?

- Can you opt out? Can you choose a human alternative or disable AI features?

- What data does it collect? Does it need that data to function?

- How is your data stored and used? Check the retention period, sharing with third parties, training use, and deletion options.

- Is it tested for fairness? Any published evaluation, audits, or documentation?

- Does it work well in your language and context? Especially important for non-dominant languages and dialects.

- Can you appeal or correct it? Can you fix wrong information or decisions?

For high-impact systems, the White House Blueprint for an AI Bill of Rights is a useful “what people deserve” checklist—covering safety, privacy, notice, and alternatives.

How to spot bias in AI outputs

Even if you can’t inspect the model, you can still test the experience.

Red flags users should watch for

- Different quality for different people: The tool works well for one group or accent but fails for others.

- Stereotypes and assumptions: It assigns roles, traits, or “likely behavior” based on identity markers.

- Overconfidence without sources: Strong claims with no evidence or citations.

- One-way decisions: No explanation, no appeal, no correction process.

- Patterned mistakes: The same type of user gets misclassified repeatedly.

Simple bias tests you can do (in minutes)

- Counterfactual test: Ask the same question but swap identity details (e.g., “he” vs “she,” different names, different locations). Do answers change unfairly?

- Language test: Try your preferred language/dialect. Does the tool become less accurate or more dismissive?

- Edge-case test: Provide a scenario outside the “typical user.” Does it fail safely or confidently hallucinate?

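- Source test: Ask: “What sources support that claim?” If it can’t provide any, treat it as unverified. If it does cite sources, confirm that they exist and say what the tool claims, because chatbots can invent citations.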
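
If you want to run the counterfactual test a little more systematically, a short script can generate the prompt variants for you. This is a minimal sketch: the template, the names, the towns, and the helper `counterfactual_prompts` are made up for illustration, and it only builds the prompts (it does not call any chatbot).

```python
from itertools import product

def counterfactual_prompts(template, swaps):
    """Fill the template with every combination of the swapped details."""
    keys = list(swaps)
    prompts = []
    for values in product(*(swaps[k] for k in keys)):
        prompts.append(template.format(**dict(zip(keys, values))))
    return prompts

# Hypothetical template and identity details to swap.
template = "{name} from {city} is applying for a loan. Write a short assessment."
swaps = {"name": ["Emily", "Aisha"], "city": ["Town X", "Town Y"]}

prompts = counterfactual_prompts(template, swaps)
for p in prompts:
    print(p)
```

Paste each prompt into a fresh chat and compare tone, length, and recommendations. Everything except the swapped details is identical, so any consistent difference in the answers deserves a closer look.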

If you’re curious how researchers formalize transparency, look up Model Cards and Datasheets for Datasets—both aim to document limitations and performance across groups.

Privacy & AI: how to protect your data

Many AI tools improve by collecting interaction data. That’s not automatically bad—but you should stay in control.

Practical privacy rules (especially for chatbots)

- Don’t paste sensitive info: passwords, OTPs, Aadhaar/SSN, bank details, private medical reports, private contracts.

- Assume prompts can be stored: Unless the provider clearly says otherwise (and offers controls).

- Be careful with “upload a file” features: Treat uploads as sharing data, not “thinking privately.”

- Use separate accounts when possible: Keep personal and professional contexts separated.

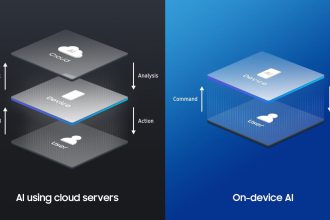

- Prefer local/on-device options for sensitive tasks: When feasible, on-device processing can reduce data exposure.
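
One way to follow the "don't paste sensitive info" rule is to scrub text before it leaves your machine. The sketch below is a rough illustration, not a complete safeguard: the three patterns (email, US SSN format, and a 12-digit ID such as an Aadhaar-style number) and the `redact` helper are assumptions, and real identifiers come in many more formats than these catch.

```python
import re

# Illustrative patterns only; they will miss many real-world formats.
PATTERNS = [
    ("[EMAIL]", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    ("[SSN]", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("[ID-12]", re.compile(r"\b\d{4}[ -]?\d{4}[ -]?\d{4}\b")),
]

def redact(text):
    """Replace each matched pattern with a placeholder label."""
    for label, pattern in PATTERNS:
        text = pattern.sub(label, text)
    return text

sample = "Mail me at jo@example.com, SSN 123-45-6789, ID 1234 5678 9012."
cleaned = redact(sample)
print(cleaned)  # Mail me at [EMAIL], SSN [SSN], ID [ID-12].
```

Treat a filter like this as a backstop. Reading your prompt before you send it is still the most reliable check.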

Regulatory frameworks are increasingly emphasizing transparency and risk controls around AI data use. The EU AI Act overview and its official legal text on EUR-Lex are worth bookmarking if you want to understand how policy is evolving.

Transparency & explanations: what you should expect

Transparency isn’t just a “nice-to-have.” It’s the difference between being informed and being manipulated.

At minimum, users should expect

- Disclosure: Clear notice that AI is being used (not hidden behind vague labels).

- Purpose: What the AI is doing (ranking, filtering, predicting, generating).

- Limits: Known failure modes and where it should not be used.

- Controls: Ability to edit inputs, correct outcomes, or opt out.

In high-stakes situations, you should expect more

- Meaningful explanation: Not technical jargon—an understandable reason for the outcome.

- Appeal / contestability: A way to challenge and review the decision.

- Human fallback: A human review path when the AI decision matters.

The UK’s policy discussion emphasizes principles like transparency, fairness, accountability, and contestability in its AI regulation white paper.

What responsible AI looks like (signals to look for)

You can often tell whether a product team takes ethics seriously by what they publish and what controls they provide.

Green flags (good signs)

- Documentation: Model cards, safety notes, known limitations, intended use cases.

- Bias testing: Mentions of fairness evaluation and ongoing monitoring.

- Clear policies: How data is used, retained, and deleted.

- Reporting channels: Easy ways to report harmful outputs and get responses.

- Governance: A visible process for risk management, audits, and accountability.

Frameworks and standards you may see referenced

- NIST AI Risk Management Framework (AI RMF) (practical risk management guidance)

- NIST AI RMF 1.0 (PDF)

- ISO/IEC 42001 (AI management systems standard)

- IEEE Ethically Aligned Design (PDF)

- Partnership on AI (industry + civil society guidance)

If you’re technical (or your team is), open-source toolkits like Fairlearn and IBM AIF360 focus on measuring and mitigating unfairness. Even as a user, it’s a good sign if a company references real methods—not just marketing words.

Rules & standards you’ll hear about

Note: This section is educational, not legal advice. AI regulation differs by country, but some major initiatives shape global expectations:

- OECD AI Principles (2019): Global, values-based guidance adopted by many countries. See OECD overview and the official recommendation.

- UNESCO Recommendation (2021): A global AI ethics standard emphasizing human rights and dignity. See UNESCO explainer and the full Recommendation text.

- EU AI Act: A risk-based regulatory framework for AI in the EU. Start with the EU overview and the official legal text (EUR-Lex).

- US AI Bill of Rights (Blueprint): Non-binding guidance outlining protections people should expect. Read the official publication.

- UK pro-innovation approach: A principles-based approach via existing regulators. See UK overview.

Even if you’re not in these regions, these frameworks influence how global products behave—especially big platforms that operate internationally.

What to do if an AI system harms you

If you believe an AI system treated you unfairly or caused harm, don’t just “accept it.” You can respond strategically.

Step-by-step action plan

- Document everything: screenshots, dates, prompts, outputs, and any explanations given.

- Request an explanation: ask how the decision was made and what data influenced it.

- Ask for a human review: especially for high-impact outcomes (accounts, payments, hiring, lending).

- Correct inaccurate data: if the system used wrong information, request correction and re-evaluation.

- Use official reporting channels: product support, abuse reporting, or transparency portals.

- Escalate if needed: consumer protection bodies, data protection authorities, or relevant regulators in your region.

As a user, your biggest leverage is a clear paper trail. The more precise your evidence, the harder it is for organizations to dismiss you.

Key Takeaways

- Bias is often a data and design problem—not always malicious intent—but the harm is still real.

- High-stakes AI should be explainable and contestable: you deserve notice, reasons, and a path to appeal.

- Test AI like you test any tool: run quick counterfactual checks and demand sources for strong claims.

- Protect your privacy: avoid sharing sensitive information and understand retention/training policies.

- Look for signals of responsibility: documentation, risk management, audits, and user controls.

FAQs

1) Is AI bias the same as human bias?

They’re related, but not identical. AI often amplifies human bias because it learns from historical data and then applies it at scale. That’s why small skews can turn into big real-world impact.

2) Can an AI be “perfectly fair”?

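Usually not. In many real-world cases, fairness involves trade-offs. Different definitions of fairness can conflict: when groups differ in how often an outcome actually occurs, a system generally cannot both equalize error rates across groups and keep its scores equally well calibrated for each group. Values also differ across cultures and contexts. The realistic goal is to reduce harm, improve equity, and ensure accountability.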

3) Why do AI tools sound confident even when wrong?

Many generative models are optimized to produce fluent text. Fluency is not the same as truth. As a user, treat outputs like “drafts” until verified—especially for medical, legal, or financial topics.

4) How can I quickly verify an AI answer?

Ask for sources, cross-check with at least two reputable references, and look for primary sources (official sites, standards bodies, peer-reviewed papers). If it can’t cite anything, treat it as unverified.

5) Does using AI mean my data will be used for training?

Not always; it depends on the provider and your settings. Read the privacy policy, look for opt-outs, and assume anything you share could be stored unless stated otherwise.

6) Are recommendations (like YouTube/TikTok/Instagram) “biased” by design?

They’re optimized for engagement. That can create systematic skews—toward sensational content, echo chambers, or stereotypes—because those patterns often keep people watching.

7) What’s the difference between “fairness” and “accuracy”?

Accuracy is overall correctness. Fairness asks whether errors and outcomes are distributed equitably across groups. A system can be “accurate overall” yet harmful to a minority group.
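
A quick worked example shows how this can happen. The group sizes and accuracy figures below are hypothetical, chosen only to make the arithmetic easy to follow.

```python
# Hypothetical numbers: 1,000 people, 10% of them in the minority group.
groups = {
    "majority": {"size": 900, "correct": 882},  # 98% accurate
    "minority": {"size": 100, "correct": 70},   # 70% accurate
}

total = sum(g["size"] for g in groups.values())
overall = sum(g["correct"] for g in groups.values()) / total
per_group = {name: g["correct"] / g["size"] for name, g in groups.items()}

print(overall)    # 0.952
print(per_group)  # {'majority': 0.98, 'minority': 0.7}
```

The headline figure of about 95% hides the fact that nearly one in three minority-group decisions is wrong, because the larger group dominates the average.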

8) What should I look for before trusting AI in a serious decision?

Disclosure, human oversight, appeal process, published limitations, privacy controls, and evidence of testing for bias and safety. If the tool is a black box with no recourse, be cautious.

9) If AI is biased, should we stop using it?

Not necessarily. The better question is: Is it being used responsibly? Some AI systems reduce human error and expand access, provided they are risk-managed, monitored, and constrained.

10) Where can I learn more without getting overwhelmed?

Start with the high-level frameworks and summaries in the References below—then go deeper only into the parts you care about (privacy, fairness, regulation, or safety).

References & further reading

- NIST: AI Risk Management Framework (AI RMF)

- NIST AI RMF 1.0 (PDF)

- OECD AI Principles overview

- OECD legal instrument: Recommendation on AI

- UNESCO: Recommendation on the Ethics of AI

- UNESCO Recommendation text

- EU: AI Act overview and timeline

- EUR-Lex: Regulation (EU) 2024/1689 (AI Act)

- US: Blueprint for an AI Bill of Rights (official publication)

- UK: AI regulation white paper

- ISO/IEC 42001: AI management systems

- IEEE: Ethically Aligned Design (PDF)

- Model Cards for Model Reporting (arXiv)

- Datasheets for Datasets (arXiv)

- Partnership on AI

- What-If Tool (fairness exploration)

Disclaimer: This article is for educational purposes and does not provide legal or professional advice.